In our previous posts, we looked into various aspects of using Qt in a telemetry scenario. Part one focused on reducing message overhead, while part two was about serialization.

To demonstrate a typical IoT scenario, we used MQTT as a protocol and the Qt MQTT module available in Qt for Automation. However, the landscape of protocols in the automation world is bigger and different protocols provide different advantages, usually with a cost involved.

A well-known weakness of MQTT is that it relies on a server instance. This implies that all nodes talk to one central place. This can easily become a communication bottleneck. For MQTT version 3.1.1 many broker providers implemented their own solutions to tackle this issue, and to some extent, this got taken care of in MQTT version 5. Those solutions do add additional servers, which sync with each other, but do not remove the need for a server completely.

One prominent protocol that allows for server-less communication is the Data Distribution Service (DDS). DDS is a standard maintained by the Object Management Group; a full description is available on their website.

In addition to its D2D communication capabilities, DDS follows a very interesting design approach: data-centric development. The idea behind data centricity is that you as a developer do not need to care about how data is transferred and/or synced between nodes; the protocol handles all of this in the background. While this is a convenience, optimizations like those in our previous posts are possible only to a limited extent.
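
To make the data-centric idea a bit more tangible, here is a minimal conceptual sketch in plain C++. It is not DDS code: `Sample` and `LatestValueCache` are hypothetical names invented for this illustration. The point is only that the application thinks in terms of "the current value of each data instance" rather than in terms of individual messages.

```cpp
#include <cstddef>
#include <map>
#include <string>

// Hypothetical sample type, not generated by any DDS tool.
struct Sample {
    std::string id;      // identifies the data instance
    double temperature;  // latest value reported for that instance
};

// Conceptual stand-in for a data-centric view of the world:
// one current value per instance, however many updates arrived.
class LatestValueCache {
public:
    // Stores the sample, replacing any older value with the same id.
    void update(const Sample &sample) { m_values[sample.id] = sample; }

    // Returns true and fills 'out' if a value for 'id' is known.
    bool lookup(const std::string &id, Sample &out) const
    {
        auto it = m_values.find(id);
        if (it == m_values.end())
            return false;
        out = it->second;
        return true;
    }

    std::size_t size() const { return m_values.size(); }

private:
    std::map<std::string, Sample> m_values;
};
```

In a real DDS application the middleware maintains this kind of state for us and distributes it between nodes; the sketch only mirrors the mental model.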

Qt does not provide a module integrating DDS. However, existing implementations are written in C++, so using both technologies in one project is doable. In the following, we go through the steps to create a Data-Centric Publish-Subscribe (DCPS) application with DDS.

In this example we are going to use the DDS implementation by RTI, which has the highest market adoption currently. Nevertheless, a couple of alternatives do exist like Vortex OpenSplice or OpenDDS. The design principles stay the same for any of those products.

To be able to sync data of the same type on all ends, the data layout has to be described in an IDL file. Similar to the protobuf example, the sensor is described as follows:

/* Struct to describe sensor */

struct sensor_information {

string ID; //@key

double ambientTemperature;

double objectTemperature;

double accelerometerX;

double accelerometerY;

double accelerometerZ;

double altitude;

double light;

double humidity;

};

To convert the IDL to source code, a tool called rtiddsgen is invoked during the build process. To integrate it into qmake, an extra compiler step is required.

RTIDDS_IDL = ../common/sensor.idl

ddsgen.output = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.cxx # Additionally created files get their own rule

ddsgen.variable_out = GENERATED_SOURCES

ddsgen.input = RTIDDS_IDL

ddsgen.commands = $${RTIDDS_PREFIX}\\bin\\rtiddsgen -language c++ -d $${OUT_PWD} ${QMAKE_FILE_NAME}

QMAKE_EXTRA_COMPILERS += ddsgen

rtiddsgen generates more than one source and one header file. For each IDL file (here sensor.idl), the following files are created:

- sensor.cxx / .h

- sensorPlugin.cxx / .h

- sensorSupport.cxx / .h

Especially the source files need to become part of the project. Otherwise, they will not get compiled and you will run into missing symbols in the linking phase.

Furthermore, extra compiler steps add a clean step that removes the generated files again. If not all files are removed properly before rtiddsgen is invoked again, the tool no longer generates correct code, which causes various compile errors.

To fix this, one additional compile step is created for each generated file:

ddsheadergen.output = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.h

ddsheadergen.variable_out = GENERATED_FILES

ddsheadergen.input = RTIDDS_IDL

ddsheadergen.depends = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.cxx

ddsheadergen.commands = echo "Additional Header: ${QMAKE_FILE_NAME}"

ddsplugingen.output = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}Plugin.cxx

ddsplugingen.variable_out = GENERATED_SOURCES

ddsplugingen.input = RTIDDS_IDL

ddsplugingen.depends = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.cxx # Depend on the output of rtiddsgen

ddsplugingen.commands = echo "Additional Source(Plugin): ${QMAKE_FILE_NAME}"

ddspluginheadergen.output = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}Plugin.h

ddspluginheadergen.variable_out = GENERATED_FILES

ddspluginheadergen.input = RTIDDS_IDL

ddspluginheadergen.depends = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.cxx

ddspluginheadergen.commands = echo "Additional Header(Plugin): ${QMAKE_FILE_NAME}"

ddssupportgen.output = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}Support.cxx

ddssupportgen.variable_out = GENERATED_SOURCES

ddssupportgen.input = RTIDDS_IDL

ddssupportgen.depends = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.cxx # Depend on the output of rtiddsgen

ddssupportgen.commands = echo "Additional Source(Support): ${QMAKE_FILE_NAME}"

ddssupportheadergen.output = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}Support.h

ddssupportheadergen.variable_out = GENERATED_FILES

ddssupportheadergen.input = RTIDDS_IDL

ddssupportheadergen.depends = $${OUT_PWD}/${QMAKE_FILE_IN_BASE}.cxx

ddssupportheadergen.commands = echo "Additional Header(Support): ${QMAKE_FILE_NAME}"

QMAKE_EXTRA_COMPILERS += ddsgen ddsheadergen ddsplugingen ddspluginheadergen ddssupportgen ddssupportheadergen

Those compile steps do nothing but print the name of the file that was generated in the previous step, but they allow for proper cleanup. Note that setting the dependencies of the steps correctly is important. Otherwise, qmake might invoke the steps in the wrong order and try to compile a not-yet-generated source file.

Moving on to the C++ source code, the steps are rather straightforward, though there are some nuances between creating a publisher and a subscriber.

Generally, each application needs to create a participant. A participant registers itself with the domain and allows communication with all other devices, or the cloud. Next, the participant creates a topic, allowing data transmission via a dedicated channel. Participants that are not registered to the topic will not receive messages. This allows for filtering and reducing data transfer.
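
The filtering effect of topics can be sketched without any DDS dependency. The following is a purely conceptual, in-process model: `TopicBus` is a hypothetical class invented here, and unlike DDS it is a single central object. It only illustrates that handlers registered for one topic never see data published on another.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical in-process model of topic-based delivery (not a DDS API).
class TopicBus {
public:
    using Handler = std::function<void(const std::string &)>;

    // Registers a handler that is only called for the given topic.
    void subscribe(const std::string &topic, Handler handler)
    {
        m_handlers[topic].push_back(std::move(handler));
    }

    // Delivers the payload to all handlers of the topic.
    // Returns the number of handlers that were notified.
    std::size_t publish(const std::string &topic, const std::string &payload) const
    {
        auto it = m_handlers.find(topic);
        if (it == m_handlers.end())
            return 0;
        for (const Handler &handler : it->second)
            handler(payload);
        return it->second.size();
    }

private:
    std::map<std::string, std::vector<Handler>> m_handlers;
};
```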

Next, a publisher is created, which in turn creates a data writer. Quoting from the standard: “A Publisher is an object responsible for data distribution. It may publish data of different data types. A DataWriter acts as a typed accessor to a publisher.” Correspondingly, this applies to subscribers as well.

const DDS_DomainId_t domainId = 0;

DDSDomainParticipant *participant = nullptr;

participant = DDSDomainParticipantFactory::get_instance()->create_participant(domainId,

DDS_PARTICIPANT_QOS_DEFAULT,

NULL,

DDS_STATUS_MASK_NONE);

if (!participant) {

qDebug() << "Could not create participant.";

return -1;

}

DDSPublisher *publisher = participant->create_publisher(DDS_PUBLISHER_QOS_DEFAULT,

NULL,

DDS_STATUS_MASK_NONE);

if (!publisher) {

qDebug() << "Could not create publisher.";

return -2;

}

const char *typeName = sensor_informationTypeSupport::get_type_name();

DDS_ReturnCode_t ret = sensor_informationTypeSupport::register_type(participant, typeName);

if (ret != DDS_RETCODE_OK) {

qDebug() << "Could not register type.";

return -3;

}

DDSTopic *topic = participant->create_topic("Sensor Information",

typeName,

DDS_TOPIC_QOS_DEFAULT,

NULL,

DDS_STATUS_MASK_NONE);

if (!topic) {

qDebug() << "Could not create topic.";

return -4;

}

DDSDataWriter *writer = publisher->create_datawriter(topic,

DDS_DATAWRITER_QOS_DEFAULT,

NULL,

DDS_STATUS_MASK_NONE);

if (!writer) {

qDebug() << "Could not create writer.";

return -5;

}
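
Note that the early returns above leave already created entities behind. One way to handle this is a small scope guard that runs a cleanup function unless setup succeeded. The sketch below is plain C++ with hypothetical names (`ScopeGuard`, `setupEntities`, `failAtStep`); in the real application, the cleanup lambda would presumably call the teardown functions of the DDS implementation, for example `delete_contained_entities()` on the participant followed by `delete_participant()` on the factory.

```cpp
#include <functional>
#include <utility>

// Runs the given cleanup function on destruction unless dismissed.
class ScopeGuard {
public:
    explicit ScopeGuard(std::function<void()> cleanup)
        : m_cleanup(std::move(cleanup)) {}
    ~ScopeGuard() { if (m_cleanup) m_cleanup(); }

    // Call once setup succeeded and the resources should stay alive.
    void dismiss() { m_cleanup = nullptr; }

    ScopeGuard(const ScopeGuard &) = delete;
    ScopeGuard &operator=(const ScopeGuard &) = delete;

private:
    std::function<void()> m_cleanup;
};

// Hypothetical setup routine. 'failAtStep' simulates a failing create_*()
// call; 'cleanedUp' records whether the cleanup function ran.
int setupEntities(int failAtStep, bool &cleanedUp)
{
    cleanedUp = false;
    // Step 1: imagine the participant has been created here.
    ScopeGuard guard([&cleanedUp]() { cleanedUp = true; });

    if (failAtStep == 2)   // e.g. create_publisher() returned null
        return -2;
    if (failAtStep == 3)   // e.g. register_type() failed
        return -3;

    guard.dismiss();       // everything worked, keep the entities
    return 0;
}
```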

The writer object is generic so far. We aim to have a data writer specific to the sensor information we created with the IDL. The sensorSupport.h header declares a method to do exactly this:

sensor_informationDataWriter *sensorWriter = sensor_informationDataWriter::narrow(writer);
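
Conceptually, narrowing behaves like a checked downcast, so it is worth testing the result for a null pointer before using it. The sketch below models this with `dynamic_cast` on hypothetical stand-in classes (`GenericWriter`, `SensorWriter`, `OtherWriter`); these are not the RTI classes, and the actual implementation of narrow() may well differ.

```cpp
// Hypothetical stand-ins for a generic and a typed writer (not RTI classes).
class GenericWriter {
public:
    virtual ~GenericWriter() {}
};

class SensorWriter : public GenericWriter {
public:
    // Returns the typed writer, or nullptr if 'writer' is of another type.
    static SensorWriter *narrow(GenericWriter *writer)
    {
        return dynamic_cast<SensorWriter *>(writer);
    }
};

class OtherWriter : public GenericWriter {};

// Typical usage pattern: treat a failed narrow like any other setup error.
int useWriter(GenericWriter *writer)
{
    SensorWriter *sensorWriter = SensorWriter::narrow(writer);
    if (!sensorWriter)
        return -6; // hypothetical error code, continuing the numbering above
    return 0;
}
```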

To create a sensor data object, we also use the support methods

sensor_information *sensorInformation = sensor_informationTypeSupport::create_data();

If a sensorInformation instance is now supposed to publish its content, this is achieved with a call to write(). Here, sensorHandle is the DDS_InstanceHandle_t identifying the instance being written:

ret = sensorWriter->write(*sensorInformation, sensorHandle);

After this call, the DDS framework takes care of publishing the object to all subscribers.

For creating a subscriber, most steps are the same as for a publisher. But instead of using a narrowed DDSDataReader, RTI provides listeners, which allow receiving data via a callback pattern. A listener needs to be passed to the subscriber when creating a data reader:

ReaderListener *listener = new ReaderListener();

DDSDataReader *reader = subscriber->create_datareader(topic,

DDS_DATAREADER_QOS_DEFAULT,

listener,

DDS_LIVELINESS_CHANGED_STATUS | DDS_DATA_AVAILABLE_STATUS);

The ReaderListener class looks like this

class ReaderListener : public DDSDataReaderListener {

public:

ReaderListener() : DDSDataReaderListener()

{

qDebug() << Q_FUNC_INFO;

}

void on_requested_deadline_missed(DDSDataReader *, const DDS_RequestedDeadlineMissedStatus &) override

{

qDebug() << Q_FUNC_INFO;

}

void on_requested_incompatible_qos(DDSDataReader *, const DDS_RequestedIncompatibleQosStatus &) override

{

qDebug() << Q_FUNC_INFO;

}

void on_sample_rejected(DDSDataReader *, const DDS_SampleRejectedStatus &) override

{

qDebug() << Q_FUNC_INFO;

}

void on_liveliness_changed(DDSDataReader *, const DDS_LivelinessChangedStatus &status) override

{

// Liveliness only reports availability, not the initial state of a sensor

// Follow up changes are reported to on_data_available

qDebug() << Q_FUNC_INFO << status.alive_count;

}

void on_sample_lost(DDSDataReader *, const DDS_SampleLostStatus &) override

{

qDebug() << Q_FUNC_INFO;

}

void on_subscription_matched(DDSDataReader *, const DDS_SubscriptionMatchedStatus &) override

{

qDebug() << Q_FUNC_INFO;

}

void on_data_available(DDSDataReader* reader) override;

};

As you can see, the listener is able to report a lot of information. In our example, though, we are mostly interested in receiving data, trusting that the other parts are doing fine.
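
Keep in mind that the status mask passed to create_datareader() decides which of these callbacks the listener is invoked for. With the mask used above, only liveliness changes and available data are reported to it. The following sketch models that filtering in plain C++; the `StatusKind` bits, `CountingListener` and `dispatch()` are hypothetical and do not correspond to the real DDS constants or internals.

```cpp
#include <cstdint>

// Hypothetical status bits for illustration only. The real DDS_*_STATUS
// constants are defined by the DDS headers and have their own values.
enum StatusKind : std::uint32_t {
    SampleLost        = 1u << 0,
    LivelinessChanged = 1u << 1,
    DataAvailable     = 1u << 2
};

// Hypothetical listener that only counts how often each callback fires.
struct CountingListener {
    int sampleLostCalls = 0;
    int livelinessCalls = 0;
    int dataAvailableCalls = 0;
};

// Invokes the matching callback only if its bit is set in the mask.
// Returns true if the listener was notified.
bool dispatch(CountingListener &listener, std::uint32_t mask, StatusKind status)
{
    if ((mask & status) == 0)
        return false;
    switch (status) {
    case SampleLost:        ++listener.sampleLostCalls; break;
    case LivelinessChanged: ++listener.livelinessCalls; break;
    case DataAvailable:     ++listener.dataAvailableCalls; break;
    }
    return true;
}
```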

on_data_available() has a DDSDataReader argument, which is first narrowed to the sensor-specific reader (sensorReader below). The narrowed reader provides a method called take(), which passes all available data updates to the caller. Available data is formatted in sequences, specifically sensor_informationSeq.

sensor_informationSeq data;

DDS_SampleInfoSeq info;

DDS_ReturnCode_t ret = sensorReader->take(

data, info, DDS_LENGTH_UNLIMITED,

DDS_ANY_SAMPLE_STATE, DDS_ANY_VIEW_STATE, DDS_ANY_INSTANCE_STATE);

if (ret == DDS_RETCODE_NO_DATA) {

qDebug() << "No data, continue...";

return;

} else if (ret != DDS_RETCODE_OK) {

qDebug() << "Could not receive data:" << ret;

return;

}

for (int i = 0; i < data.length(); ++i) {

if (info[i].valid_data) {

qDebug() << data[i];

} else {

qDebug() << "Received Metadata on:" << i;

}

}

Note that the sequences contain all updates to all sensors. We are not filtering to one specific sensor.
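
If only one sensor is of interest, the simplest option is to filter on the receiving side by comparing the key field. The sketch below shows this on a plain C++ stand-in; `Reading` and `readingsFor()` are hypothetical and merely mimic the ID field and the valid_data flag from the loop above. DDS also specifies content-filtered topics, which would move such filtering into the middleware, but that is beyond this example.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for one received sample plus its validity flag.
struct Reading {
    std::string id;
    double ambientTemperature;
    bool valid;
};

// Returns all valid readings that belong to the sensor with the given id.
std::vector<Reading> readingsFor(const std::vector<Reading> &all,
                                 const std::string &id)
{
    std::vector<Reading> result;
    for (const Reading &reading : all) {
        if (reading.valid && reading.id == id)
            result.push_back(reading);
    }
    return result;
}
```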

Once we are done processing the data, we have to return it to the reader:

ret = sensorReader->return_loan(data, info);

One optimization capability in DDS is to use zero-copy data. This implies that the internal representation of the data is passed to the developer instead of a copy, which is why it has to be handed back afterwards. That can be important when the data size is big.
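
Since every early return or exception between take() and return_loan() would leave the loan outstanding, it can help to tie the return to a scope. The following sketch shows such a guard as a template; `LoanGuard`, `MockReader` and `sumValid()` are hypothetical names, and the mock only imitates the take()/return_loan() pairing with standard containers. With the real API, one would presumably instantiate the guard with the narrowed reader and the two sequences.

```cpp
#include <cstddef>
#include <vector>

// Calls return_loan() on the reader when the guard goes out of scope.
template <typename Reader, typename DataSeq, typename InfoSeq>
class LoanGuard {
public:
    LoanGuard(Reader &reader, DataSeq &data, InfoSeq &info)
        : m_reader(reader), m_data(data), m_info(info) {}
    ~LoanGuard() { m_reader.return_loan(m_data, m_info); }

    LoanGuard(const LoanGuard &) = delete;
    LoanGuard &operator=(const LoanGuard &) = delete;

private:
    Reader &m_reader;
    DataSeq &m_data;
    InfoSeq &m_info;
};

// Hypothetical mock that tracks how many loans are outstanding.
struct MockReader {
    int outstandingLoans = 0;

    void take(std::vector<int> &data, std::vector<bool> &valid)
    {
        data = {1, 2, 3};
        valid = {true, true, false};
        ++outstandingLoans;
    }

    int return_loan(std::vector<int> &data, std::vector<bool> &valid)
    {
        data.clear();
        valid.clear();
        --outstandingLoans;
        return 0;
    }
};

// Sums all valid values; the loan is returned on every exit path.
int sumValid(MockReader &reader)
{
    std::vector<int> data;
    std::vector<bool> valid;
    reader.take(data, valid);
    LoanGuard<MockReader, std::vector<int>, std::vector<bool>> guard(reader, data, valid);

    int sum = 0;
    for (std::size_t i = 0; i < data.size(); ++i) {
        if (valid[i])
            sum += data[i];
    }
    return sum;
}
```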

Again, the source is located here. When running the application, it is important to note that the NDDSHOME environment variable needs to be specified to create a virtual mesh network for experimentation.

As a last step, we want to integrate the publisher with a QML application. This demo is also available in the repository.

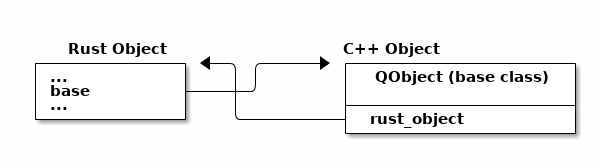

We are going to re-use the principles from our protobuf examples in the previous post of this series. Basically, we create a SensorInformation class, which holds a sensor_information member being created from DDS.

class SensorInformation : public QObject

{

Q_OBJECT

Q_PROPERTY(double ambientTemperature READ ambientTemperature WRITE setAmbientTemperature NOTIFY ambientTemperatureChanged)

Q_PROPERTY(double objectTemperature READ objectTemperature WRITE setObjectTemperature NOTIFY objectTemperatureChanged)

[…]

void init();

void sync();

private:

sensor_information *m_info;

sensor_informationDataWriter *m_sensorWriter;

DDS_InstanceHandle_t m_handle;

QString m_id;

};

In this very basic example, init() will initialize the participant, publisher and datawriter similar to the steps described above.

We added a function sync(), which syncs the current data state to all subscribers.

void SensorInformation::sync()

{

DDS_ReturnCode_t ret = m_sensorWriter->write(*m_info, m_handle);

if (ret != DDS_RETCODE_OK) {

qDebug() << "Could not write data.";

}

}

sync() is invoked whenever a property changes, for instance:

void SensorInformation::setAmbientTemperature(double ambientTemperature)

{

if (qFuzzyCompare(m_info->ambientTemperature, ambientTemperature))

return;

m_info->ambientTemperature = ambientTemperature;

emit ambientTemperatureChanged(ambientTemperature);

sync();

}
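
One caveat regarding the change detection: the Qt documentation notes that qFuzzyCompare() does not work when either value is 0.0, and suggests comparing both values after adding 1.0 as one possible solution. For a temperature property, 0.0 is a perfectly plausible value. The sketch below reproduces the relative comparison in plain C++ so the effect can be seen without Qt; `fuzzyCompare()` and `fuzzyCompareNearZero()` are hypothetical helpers, and the formula is our assumption of what Qt's header does for doubles.

```cpp
#include <algorithm>
#include <cmath>

// Relative comparison, assumed to mirror qFuzzyCompare(double, double).
bool fuzzyCompare(double a, double b)
{
    return std::fabs(a - b) * 1000000000000.0
           <= std::min(std::fabs(a), std::fabs(b));
}

// Shifted variant for values that may be 0.0, following the workaround
// suggested in the Qt documentation (compare 1.0 + a with 1.0 + b).
bool fuzzyCompareNearZero(double a, double b)
{
    return fuzzyCompare(1.0 + a, 1.0 + b);
}
```

With the unshifted comparison, any value different from exactly 0.0 counts as a change once the stored value is 0.0, so the setter would emit the signal and call sync() more often than intended.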

And that is all that needs to be done to integrate DDS into a Qt application. For the subscriber part, we could use the callback-based listeners and update the objects accordingly. This is left as an exercise for the reader.

While experimenting with DDS and Qt, a couple of ideas came up. For instance, a QObject-based declaration could be parsed and a matching IDL generated for all of its properties. That IDL would automatically be integrated, and source code templates would be created for the declaration, for both publisher and subscriber. That would allow using Qt only and integrating an IoT syncing mechanism out of the box, DDS in this case. What would you think about such an approach?
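
To give a rough impression of the generation step, here is a small sketch in plain C++ that turns a list of properties into IDL text in the style of the sensor declaration above. Everything in it is hypothetical: `Property` and `generateIdl()` are invented for this illustration, and a real tool would have to extract the properties from the QObject declaration and map Qt types to IDL types first.

```cpp
#include <string>
#include <vector>

// Hypothetical description of one property, already mapped to an IDL type.
struct Property {
    std::string type;  // IDL type name, e.g. "double"
    std::string name;  // member name
    bool isKey;        // whether the member identifies the instance
};

// Produces an IDL struct declaration for the given properties.
std::string generateIdl(const std::string &structName,
                        const std::vector<Property> &properties)
{
    std::string idl = "struct " + structName + " {\n";
    for (const Property &property : properties) {
        idl += "    " + property.type + " " + property.name + ";";
        if (property.isKey)
            idl += " //@key";
        idl += "\n";
    }
    idl += "};\n";
    return idl;
}
```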

To summarize, the IoT world is full of different protocols and standards. Each has its pros and cons, and Qt cannot (and will not) provide an implementation for all of them. Recently, we have been focusing on MQTT and OPC UA (another blog post on this topic will come soon). But DDS is another good example of a technology used in the field. What we wanted to highlight with this post is that it is always possible to integrate other C++ technologies into Qt and use both side by side, sometimes even benefitting each other.

The post Building a Bridge from Qt to DDS appeared first on Qt Blog.
